Overview of Hashtable implementation in Java

HashTable also known as Dictionary in some programming languages is a data structure that stores key-value pairs and allows efficient search and retrieval of values based on their corresponding keys, and provides efficient constant-time average case performance for operations such as insertion, deletion, and lookup.

Collision: It is possible for a hash function to generate the same hash code for two different keys.

Techniques to resolve collisions:

- Separating Chaining or Closed Addressing: In this approach, each array index is associated with a data Structure, typically a LinkedList, where key-value pairs that hash to same index are stored.

Note: There are other techniques, but this is widely used technique.

Techniques to reduce collisions:

- Using an effective hash function: A good hash function will distribute the keys uniformly across the array, avoiding mapping multiple keys to the same index. A hash function that generates well-distributed hash values will reduce the number of collisions and improve the performance of the HashTable.

- Resizing and Rehashing: When substantial portion/space of HashTable is consumed, then it’s vital to create a new table, do rehash, and reinsert entries.

It’s not entirely possible to eliminate collisions in a HashTable, as collisions are inherent part of this data structure.

Here is the pseudocode of HashTable

HashTable[] hashtable = new HashTable[16] // Is an array of buckets

function hash(key) {

// compute the index with given key

return index

}

function put(key, value) {

int index = hash(key)

hashtable[index] = value

}

function get(key) {

int index = hash(key)

return hashtable[index]

}

HashMap in java

-

java.util.HashMap in Java is a HashTable data structure implementation.

-

Terminology:

- Entry: An entry is a key-value pair

- Bin: Bucket is known as bin. Also, this is a slot where entries are stored. It’s the value at a specific array index.

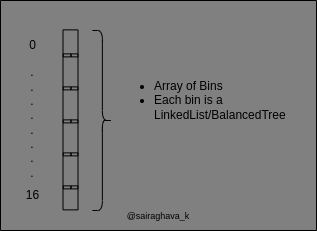

- Table: Array of bins is termed as Table. The underlying array used in HashTable implementation.

- Capacity: Size of the Table or total number of bins.

- Size: Total number of entries across all the bins.

- Load Factor: A measure of how much of map space is consumed. Example

0.75meaning 75% of map is filled with entries. - Threshold: Capacity X Load Factor. A key deciding factor for resizing table.

- Collision: When multiple keys upon hashing returns the same table index i.e., bucket/bin location.

- Treeification: The process of replacing LinkedList with Tree Structure to improve HashTable performance. This is introduced in Java8

- It’s an array of bins. Size of the array is 16 by default i.e., capacity of HashMap. DEFAULT_INITIAL_CAPACITY

- By default data in each bin is stored as a LinkedList data structure. LinkedList Implementation in HashMap

- size() returns the size of the HashMap. The property size of HashMap is updated every time an entry is added, updated or removed from the map.

- The default load factor is 0.75

- When is table resized?

- When the size of HashMap is greater than the threshold.

- Example Scenario:

-

Let’s create a HashMap with 2 entries.

-

Map<String, String> map = new HashMap<>(); map.put("January", "Boring"); map.put("February", "Fantastic");

-

size()of HashMap is 2- Default Values: {load factor is 0.75}, {capacity is 16}

- The

thresholdis a property in HashMap that will be computed asDEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY= 0.75*16 = 12. - The table will be resized when size/number of HashMap entries is greater than threshold i.e., 12 in this case.

if (++size > threshold)

-

- Resizing doesn’t just increase the table capacity/size, but it also does rebalancing(i.e., all the entries in old table are reinserted into new table with upgraded capacity) to effectively distribute entries.

- Note:

- A small load factor will result in fewer collisions and better performance, but will also result in larger table and higher memory usage.

- A larger load factor will result in more collisions and lower performance, but will also result in a smaller table and lower memory usage.

- When is LinkedList replaced with Balancedtree? Or, When will treefication kicks off? - This is introduced in Java8

- LinkedList is replaced with balanced Trees when BinCount(Size of LinkedList) is >= TREEIFY_THRESHOLD

- TREEIFY_THRESHOLD=8

- treeifyBin()

-

It will not treeify when table size/capacity < MIN_TREEIFY_CAPACITY instead it will resize the table.

- Otherwise, it will do Treeification.

-

- LinkedList is replaced with balanced Trees when BinCount(Size of LinkedList) is >= TREEIFY_THRESHOLD

- Java’s HashMap implementation has

hash()function that doesn’t directly return array index, instead Bitwise AND of this hash value and length of the array is performed to get the index. Here is how a specific index is resolved. -

Below is a sample example that demonstrates how the index of the array is computed.

-

// 1. Get the hash of the key Object key = new Object(); int hashCode = key.hashCode(); int hash = ((hashCode) ^ (hashCode >>> 16)); // 16 is the default capacity of HashTable // 2. Compute array index int index = ((array.length-1) & hash);

-

- To put it in a nutshell, Java’s HashMap implementation uses separate chaining technique to address collisions. In case if the number of elements in LinkedList exceeds a certain threshold, called the treeify threshold, the linked list is replaced with a balanced tree data structure like RedBlackTree for better performance.

References: